Sensors#

The sensors module in c4dynamics provides physical and vision-based sensor models for simulating real-world measurements.

It encompasses a variety of sensing modalities, from physical measurements to computer vision. This gives simulated agents the “eyes and ears” they need to perceive and interpret the environment.

This module is designed to be flexible and extensible, allowing you to integrate multiple sensor types into your simulations while maintaining consistent interfaces for data acquisition and processing.

This section includes three main components:

YOLOv3 Class#

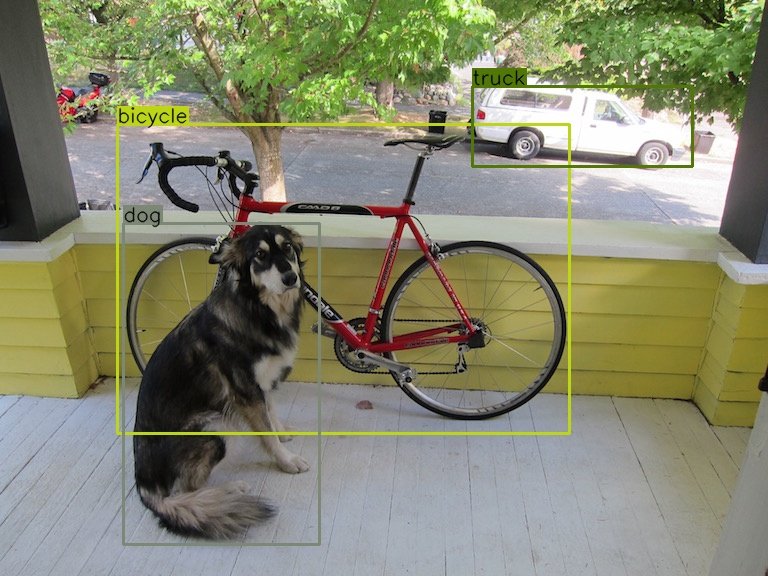

A real-time object detection interface based on the YOLOv3 architecture. It provides bounding boxes, class predictions, and confidence scores, enabling simulated agents to perceive and classify visual elements in their environment.

With weights pre-trained on the COCO dataset, YOLOv3 detects the following 80 object classes:

COCO dataset

person, bicycle, car, motorcycle, airplane, bus, train, truck, boat, traffic light, fire hydrant, stop sign, parking meter, bench, bird, cat, dog, horse, sheep, cow, elephant, bear, zebra, giraffe, backpack, umbrella, handbag, tie, suitcase, frisbee, skis, snowboard, sports ball, kite, baseball bat, baseball glove, skateboard, surfboard, tennis racket, bottle, wine glass, cup, fork, knife, spoon, bowl, banana, apple, sandwich, orange, broccoli, carrot, hot dog, pizza, donut, cake, chair, couch, potted plant, bed, dining table, toilet, tv, laptop, mouse, remote, keyboard, cell phone, microwave, oven, toaster, sink, refrigerator, book, clock, vase, scissors, teddy bear, hair drier, toothbrush

Figure 1: Object Detection with YOLO using COCO pre-trained classes ‘dog’, ‘bicycle’, ‘truck’. Read more at: darknet-yolo.
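A detector of this kind returns, for each object, a bounding box, a class label, and a confidence score. The sketch below shows one way downstream code might keep only the classes and confidence levels it cares about. The `Detection` container and the `filter_detections` helper are hypothetical names introduced for illustration, not part of the c4dynamics API, and the detection values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical container for one detector output (not a c4dynamics type)."""
    label: str          # COCO class name, e.g. "dog"
    confidence: float   # detection score in [0, 1]
    box: tuple          # (x_min, y_min, x_max, y_max) in pixels


def filter_detections(detections, wanted_labels, min_confidence=0.5):
    """Keep detections of the wanted classes at or above a confidence threshold."""
    wanted = set(wanted_labels)
    return [d for d in detections
            if d.label in wanted and d.confidence >= min_confidence]


# Made-up detections, for illustration only
detections = [
    Detection("dog", 0.98, (130, 220, 310, 540)),
    Detection("bicycle", 0.91, (120, 130, 570, 420)),
    Detection("truck", 0.42, (470, 80, 690, 170)),
]

kept = filter_detections(detections, {"dog", "truck"}, min_confidence=0.5)
print([d.label for d in kept])  # ['dog'] (the truck falls below the threshold)
```

Filtering after detection keeps the detector itself generic: the same output can feed a tracker that follows only vehicles or one that follows only people.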

Seeker Class#

Models a generic seeker sensor used in guidance and tracking simulations. It measures the azimuth and elevation angles through an error model, simulating how onboard seekers detect and track targets.

Functionality

At each time step, the seeker returns measurements based on the true geometry relative to the target.

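Let the relative coordinates in an arbitrary frame of reference be:

\[dx = x_{target} - x_{seeker}, \quad dy = y_{target} - y_{seeker}, \quad dz = z_{target} - z_{seeker}\]

The relative coordinates in the seeker body frame are given by:

\[x_b = [BR] \cdot [dx, dy, dz]^T\]

where \([BR]\) is a Body from Reference DCM (Direction Cosine Matrix) formed by the seeker's three Euler angles. See the rigidbody section below.

The azimuth and elevation measures are then the spatial angles:

\[az = \tan^{-1}\left(\frac{x_b[1]}{x_b[0]}\right)\]

\[el = \tan^{-1}\left(\frac{x_b[2]}{\sqrt{x_b[0]^2 + x_b[1]^2}}\right)\]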

Where:

\(az\) is the azimuth angle

\(el\) is the elevation angle

\(x_b\) is the position vector of the target relative to the seeker, expressed in the seeker body frame

Fig-1: Azimuth and elevation angles definition
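The geometry described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the library's implementation: the DCM is built from a 3-2-1 (yaw, pitch, roll) Euler sequence, the body x-axis is taken as the boresight, and the angles are computed with `atan2` so that the quadrant is preserved. The function names `dcm_body_from_reference` and `az_el` are hypothetical.

```python
import math


def dcm_body_from_reference(yaw, pitch, roll):
    """Body-from-reference DCM for a 3-2-1 (yaw, pitch, roll) Euler sequence.

    The rotation order is an assumption of this sketch. Angles are in radians.
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cp * cy,                cp * sy,                -sp],
        [sr * sp * cy - cr * sy, sr * sp * sy + cr * cy, sr * cp],
        [cr * sp * cy + sr * sy, cr * sp * sy - sr * cy, cr * cp],
    ]


def az_el(target_pos, seeker_pos, yaw=0.0, pitch=0.0, roll=0.0):
    """Return the true (error-free) azimuth and elevation in radians."""
    # Relative position in the reference frame
    rel = [t - s for t, s in zip(target_pos, seeker_pos)]
    # Rotate into the seeker body frame: x_b = [BR] * rel
    br = dcm_body_from_reference(yaw, pitch, roll)
    x_b = [sum(br[i][j] * rel[j] for j in range(3)) for i in range(3)]
    # Spatial angles relative to the body x-axis (assumed boresight)
    az = math.atan2(x_b[1], x_b[0])
    el = math.atan2(x_b[2], math.hypot(x_b[0], x_b[1]))
    return az, el


az, el = az_el(target_pos=(100.0, 100.0, 0.0), seeker_pos=(0.0, 0.0, 0.0))
print(math.degrees(az), math.degrees(el))
```

With the seeker axes aligned to the reference frame, a target at equal x and y offsets and zero z offset sits at 45° azimuth and 0° elevation. Yawing the seeker by 45° toward the same target brings the azimuth back to approximately zero.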

Radar Class#

Simulates a configurable radar sensor, producing measurements such as range, azimuth, and elevation. Radar is a subclass of Seeker, so its measurements are likewise passed through an error model to simulate real-world sensor imperfections.

Radar vs Seeker

The following table lists the main differences between seeker and radar in terms of measurements and default error parameters:

| | Angles | Range | \(\sigma_{Bias}\) | \(\sigma_{Scale Factor}\) | \(\sigma_{Angular Noise}\) | \(\sigma_{Range Noise}\) |
|---|---|---|---|---|---|---|
| Seeker | ✔️ | ❌ | \(0.1^\circ\) | \(5\%\) | \(0.4^\circ\) | N/A |
| Radar | ✔️ | ✔️ | \(0.3^\circ\) | \(7\%\) | \(0.8^\circ\) | \(1\,\text{m}\) |
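To make the role of these parameters concrete, the sketch below applies a bias, a scale factor, and white noise to a true angle. The form `measured = true * (1 + scale_factor) + bias + noise` is an assumption of this sketch, as is the choice to draw the bias and scale factor once per sensor and the noise once per measurement; the actual c4dynamics error model may differ. `AngleErrorModel`, `noisy_range`, and the constant names are hypothetical. Only the default standard deviations are taken from the table.

```python
import math
import random

# Default 1-sigma values from the table (angles in degrees, scale factor as a fraction)
SEEKER_ANGLE_DEFAULTS = {"bias_std_deg": 0.1, "scale_factor_std": 0.05, "noise_std_deg": 0.4}
RADAR_ANGLE_DEFAULTS = {"bias_std_deg": 0.3, "scale_factor_std": 0.07, "noise_std_deg": 0.8}
RADAR_RANGE_NOISE_STD_M = 1.0


class AngleErrorModel:
    """Hypothetical angular error model: scale factor, bias, and white noise."""

    def __init__(self, bias_std_deg, scale_factor_std, noise_std_deg, rng=None):
        self._rng = rng if rng is not None else random.Random()
        # Assumption: bias and scale factor are fixed for the life of the sensor
        self.bias = math.radians(self._rng.gauss(0.0, bias_std_deg))
        self.scale_factor = self._rng.gauss(0.0, scale_factor_std)
        self.noise_std = math.radians(noise_std_deg)

    def measure(self, true_angle):
        """Return a corrupted angle in radians; fresh noise is drawn on every call."""
        noise = self._rng.gauss(0.0, self.noise_std)
        return true_angle * (1.0 + self.scale_factor) + self.bias + noise


def noisy_range(true_range, range_noise_std_m, rng):
    """Hypothetical range measurement: truth plus zero-mean Gaussian noise."""
    return true_range + rng.gauss(0.0, range_noise_std_m)


rng = random.Random(0)  # seeded so the run is repeatable
radar_angles = AngleErrorModel(**RADAR_ANGLE_DEFAULTS, rng=rng)
az_measured = radar_angles.measure(math.radians(10.0))
range_measured = noisy_range(1000.0, RADAR_RANGE_NOISE_STD_M, rng)
print(math.degrees(az_measured), range_measured)
```

Setting all three standard deviations to zero turns the model into an ideal sensor, which is a convenient baseline when checking a guidance or tracking loop before adding measurement errors.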

Whether you are simulating an autonomous vehicle, a missile guidance loop, or a robotic system, the Sensors module gives your models the “eyes and ears” they need to interact with the dynamic world.